Physical AI integrates software intelligence with physical systems to enable machines that perceive, decide, and act autonomously and in collaboration with humans. Over the years, we’ve witnessed the development of Digital AI: systems that think, analyze, and predict in the digital world. Now we are seeing a significant shift from Digital AI to Physical AI, where software intelligence drives physical precision, enabling real-time interaction and adaptation within physical environments.

Physical AI spans a vast landscape, powering everything from autonomous self-driving systems to human-assistive robots. This article focuses on its industrial core: the application of AI-driven intelligence within modern industrial manufacturing systems across the enterprise.

The aim is to present these concepts in a simple, accessible way.

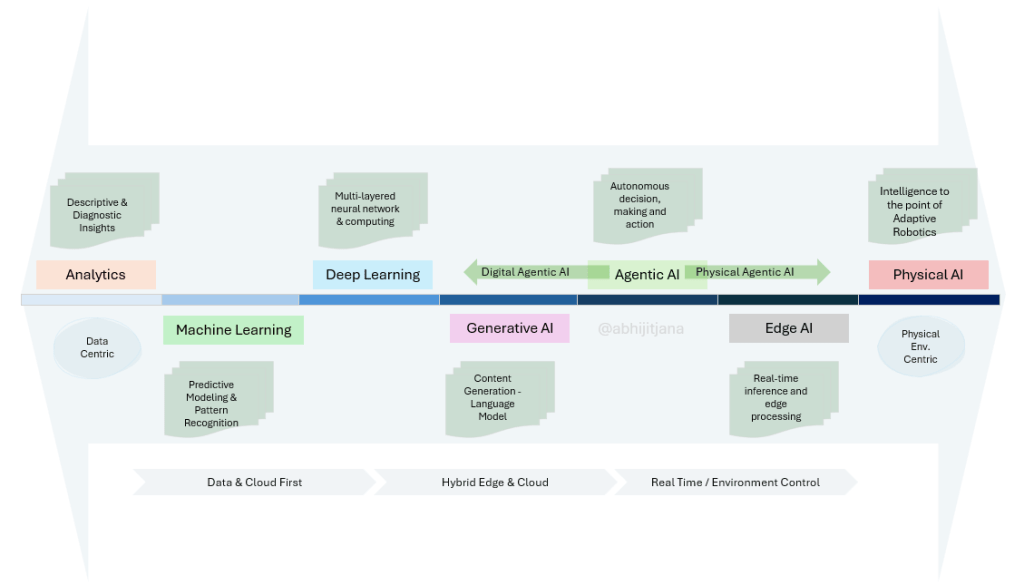

The Journey from Digital AI to Physical AI

Digital AI has been fundamentally rooted in data and has predominantly operated within the cloud. Its strengths lie in analyzing vast amounts of information, predicting future outcomes, and optimizing digital workflows. Examples include machine learning models that forecast demand or identify anomalies in production data, as well as Generative AI tools capable of designing new images or writing code.

However, in sectors such as manufacturing, logistics, automotive, and healthcare, the true value emerges on the operational floor. Physical AI moves intelligence to the point of action—operating at the edge, within moving machines and robots. These systems are equipped to sense their surroundings, make decisions, and adapt in real time.

Evolution of Industrial Manufacturing Automation

Because this article focuses on the fundamentals of Physical AI within industrial manufacturing automation, it helps to first review the background and lay out the automation stack.

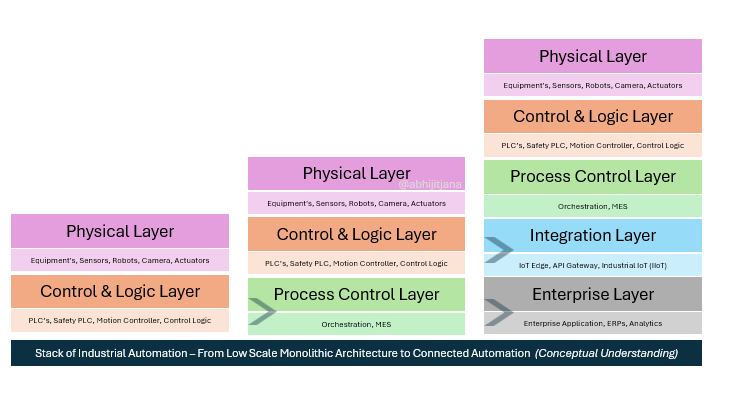

Over the years, industrial automation has undergone significant transformation. Initially, automation was hardware-centric, characterized by a monolithic approach designed for small-scale operations. In this model, systems performed a fixed set of operations without involving any external subsystems.

With time, the industry progressed toward integrated and connected automation. This shift was marked by the introduction of integration layers and enterprise layers, enabling end-to-end connectivity, seamless data exchange, enhanced traceability, and real-time monitoring across processes. These advancements allowed for greater flexibility and efficiency in managing industrial operations.

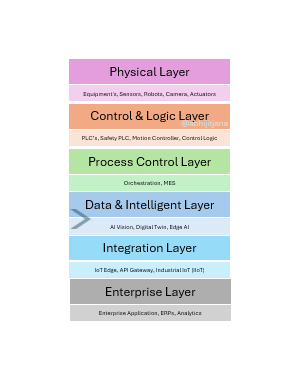

The latest phase in this evolution has been driven by advances in data and emerging technologies. Innovations such as AI-powered computer vision, digital twins, and edge processing have made high-performance computing and real-time feedback possible.

These represent the early applications of Physical AI, where AI-driven decisions directly influence physical outcomes on the manufacturing floor.

Understanding Physical AI

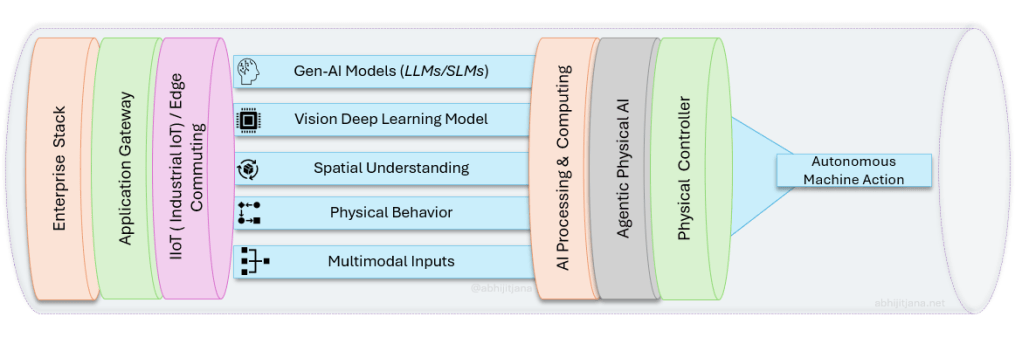

Physical AI is the integration of artificial intelligence with physical systems that interact directly with their environments. Rather than being a single system, Physical AI is composed of a stack of interconnected intelligence layers. At its core, Physical AI brings together machine learning, natural language processing (NLP), and computer vision technologies with physical controllers, such as programmable logic controllers (PLCs). These controllers manage the operation of robots, sensors, cameras, actuators, and other equipment on the manufacturing floor.

Core Layers of Physical AI

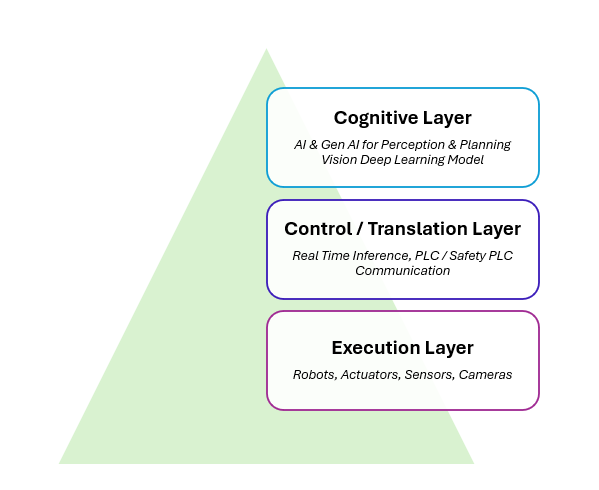

Physical AI operates through three essential layers of intelligence at the edge. While enterprise and integration layers are important for ensuring end-to-end operations, these three edge layers are fundamental to the functioning of Physical AI.

- Cognitive Layer: This acts as the brain of Physical AI. It is responsible for perception, prediction, and planning, leveraging outputs from large and small language models (LLMs/SLMs) and vision models.

- Control / Translation Layer: The decisions and outputs generated by the cognitive layer are translated into actionable machine instructions, such as commands for PLCs and robot controllers.

- Execution Layer: These instructions are executed at the point of action by robots or other physical components, ensuring the system responds effectively to real-world conditions.

Through this layered approach, Physical AI enables intelligent, adaptive, and real-time control of industrial systems, bridging the gap between digital intelligence and physical action.
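To make the hand-off between the three layers concrete, here is a minimal Python sketch. All class names, fields, and the `MOVE_AND_GRIP` command are hypothetical. A real cognitive layer would wrap vision and language models, and a real control layer would talk to a PLC or robot controller rather than return a string.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    """Cognitive-layer input: an object found by a (hypothetical) vision model."""
    label: str
    x_mm: float
    y_mm: float


@dataclass
class Decision:
    """Cognitive-layer output: what to do and to which object."""
    action: str
    target: Detection


@dataclass
class MachineInstruction:
    """Control-layer output: a low-level command the execution layer understands."""
    command: str
    x_mm: float
    y_mm: float


def cognitive_layer(detections: List[Detection]) -> Decision:
    # Perception + planning: pick the object closest to the robot base (origin).
    target = min(detections, key=lambda d: d.x_mm ** 2 + d.y_mm ** 2)
    return Decision(action="pick", target=target)


def control_layer(decision: Decision) -> MachineInstruction:
    # Translation: map the abstract action onto a (hypothetical) controller command.
    command_table = {"pick": "MOVE_AND_GRIP"}
    return MachineInstruction(command_table[decision.action],
                              decision.target.x_mm, decision.target.y_mm)


def execution_layer(instruction: MachineInstruction) -> str:
    # Stand-in for the robot/PLC: report what would be executed.
    return f"{instruction.command} at ({instruction.x_mm:.1f}, {instruction.y_mm:.1f}) mm"


detections = [Detection("toy", 120.0, 40.0), Detection("toy", 30.0, 10.0)]
print(execution_layer(control_layer(cognitive_layer(detections))))
# MOVE_AND_GRIP at (30.0, 10.0) mm
```

Keeping each layer behind its own small interface means a model in the cognitive layer can be swapped without touching the controller-facing code.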

Foundation Working Principle of Physical AI

Physical AI extends the capabilities of traditional physical processing systems by combining generative AI and computer vision with a deeper understanding of spatial mapping and of how objects behave in the three-dimensional world.

Unlike conventional automation, which typically runs predetermined routines, Physical AI is designed to process and interpret a wide range of input types, such as images, videos, text, speech, and sensor data from the real environment. By combining these multimodal inputs, Physical AI can analyze physical spaces and dynamic conditions in real time.

Through this integration, Physical AI transforms raw data from multiple sources into actionable outputs. This means that the system not only perceives and interprets its surroundings but also adapts its actions based on those insights, resulting in more intelligent and flexible interactions with physical environments.

Simplified Use Case of Physical AI

To better understand how Physical AI works in practice, consider the scenario of a robotic arm tasked with placing toys into a box.

In Traditional Manufacturing

- Logic: It follows a rigid path: it moves to coordinate (x1, y1), picks up an object, and places it at coordinate (x2, y2).

- Problem: If a toy is slightly tilted or the box is shifted a few inches to the left, the operation fails, and an operator must intervene to reposition the parts and reset the cell.
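The fragility of the fixed routine can be sketched in a few lines of Python. The taught coordinates and the 5 mm tolerance are assumed values. Only positional offset is modeled; tilt is ignored for brevity.

```python
import math

# Hypothetical taught positions (mm) and gripper tolerance.
TAUGHT_PICK = (100.0, 50.0)    # (x1, y1)
TAUGHT_PLACE = (300.0, 50.0)   # (x2, y2)
TOLERANCE_MM = 5.0             # max offset the fixed routine can absorb


def fixed_pick_and_place(part_xy, box_xy):
    """Always move to the taught coordinates; succeed only if the world matches them."""
    if math.hypot(part_xy[0] - TAUGHT_PICK[0], part_xy[1] - TAUGHT_PICK[1]) > TOLERANCE_MM:
        return "FAULT: part not at taught pick position, operator reset required"
    if math.hypot(box_xy[0] - TAUGHT_PLACE[0], box_xy[1] - TAUGHT_PLACE[1]) > TOLERANCE_MM:
        return "FAULT: box not at taught place position, operator reset required"
    return "OK"


print(fixed_pick_and_place((100.0, 50.0), (300.0, 50.0)))         # OK
print(fixed_pick_and_place((100.0, 50.0), (300.0 - 76.2, 50.0)))  # box 3 in (76.2 mm) left: FAULT
```

The routine never looks at where things actually are. It only checks afterwards whether reality happened to match the program.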

With Physical AI – Physical Precision + AI + LLM + Agentic AI

The robotic arm is enhanced with computer vision and deep learning.

- Logic: A camera scans the area. Instead of following a fixed script, a deep learning model calculates a new path for every single toy.

- Success: This adaptive path calculation allows the robotic arm to recognize toys even when they are not perfectly positioned and to adjust its grip to pick them up correctly.
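Here is a minimal sketch of the adaptive alternative. It assumes a camera looking straight down at a flat work area and a simple linear pixel-to-millimetre calibration; real cells use a full camera-to-robot calibration. `VisionResult` stands in for the output of a hypothetical deep learning detector. The point is that the pick pose is computed for each toy rather than taught once.

```python
from dataclasses import dataclass

# Assumed planar calibration: robot_mm = ORIGIN_MM + pixel * MM_PER_PX.
MM_PER_PX = 0.5
ORIGIN_MM = (0.0, 0.0)


@dataclass
class VisionResult:
    """Hypothetical output of a vision model for one toy."""
    u_px: float       # centroid column in the image
    v_px: float       # centroid row in the image
    tilt_deg: float   # in-plane rotation of the toy


def plan_pick(det: VisionResult) -> dict:
    """Compute a per-toy pick pose instead of using a fixed taught coordinate."""
    x = ORIGIN_MM[0] + det.u_px * MM_PER_PX
    y = ORIGIN_MM[1] + det.v_px * MM_PER_PX
    # Rotate the gripper to match the toy's tilt, normalised to [-90, 90),
    # since a two-finger gripper is symmetric under a 180-degree rotation.
    grip_deg = (det.tilt_deg + 90.0) % 180.0 - 90.0
    return {"x_mm": x, "y_mm": y, "grip_deg": grip_deg}


print(plan_pick(VisionResult(u_px=200, v_px=100, tilt_deg=15.0)))
# {'x_mm': 100.0, 'y_mm': 50.0, 'grip_deg': 15.0}
```

A toy tilted by 170 degrees yields a grip angle of -10 degrees, so the gripper takes the shorter rotation.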

Furthermore, when we integrate Agentic AI and Large Language Models (LLMs), the robot’s abilities extend to understanding its job and solving problems on its own.

For instance, if production switches to handling fragile glass toys, the operator does not need to rewrite code. They can simply give a natural-language instruction such as: “Switch to ‘Fragile Glass Toys’ today. Pick them up 60% slower, use a soft grip, and ensure the box has a red fragile sticker on the side.”

The LLM then translates this instruction into the precise commands and parameters the robot needs to perform the task, allowing for greater flexibility and ease of operation:

- Product Type = “Fragile Glass Toys”

- Robot Speed = 40%

- Tool Changer = Active

- Gripper Change = “Soft Grip”

- Fragile Vision Scan = Active
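Before LLM-generated parameters reach the machine, it is prudent to treat them as untrusted input and validate them against what the cell actually supports. The sketch below assumes the LLM has been prompted to return JSON. The field names, allowed gripper values, and validation rules are hypothetical, and no LLM is called. It also makes the arithmetic explicit: "60% slower" corresponds to a speed of 40%.

```python
import json

# Hypothetical schema for the cell's recipe parameters.
REQUIRED = {"product_type", "robot_speed_pct", "tool_changer", "gripper", "fragile_vision_scan"}
ALLOWED_GRIPPERS = {"Standard Grip", "Soft Grip"}


def speed_from_slowdown(slowdown_pct: float) -> float:
    """'Pick them up 60% slower' means running at 100 - 60 = 40% of nominal speed."""
    return 100.0 - slowdown_pct


def validate_llm_params(raw: str) -> dict:
    """Parse LLM output and reject anything outside the cell's allowed values."""
    params = json.loads(raw)
    if not isinstance(params, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED - set(params)
    if missing:
        raise ValueError(f"missing parameters: {sorted(missing)}")
    speed = params["robot_speed_pct"]
    if isinstance(speed, bool) or not isinstance(speed, (int, float)) or not 0 < speed <= 100:
        raise ValueError("robot_speed_pct must be a number in (0, 100]")
    if params["gripper"] not in ALLOWED_GRIPPERS:
        raise ValueError(f"unknown gripper: {params['gripper']}")
    for flag in ("tool_changer", "fragile_vision_scan"):
        if not isinstance(params[flag], bool):
            raise ValueError(f"{flag} must be true or false")
    return params


# What an LLM might return for the operator's instruction (illustrative, not real model output).
llm_output = """{
  "product_type": "Fragile Glass Toys",
  "robot_speed_pct": 40,
  "tool_changer": true,
  "gripper": "Soft Grip",
  "fragile_vision_scan": true
}"""

params = validate_llm_params(llm_output)
print(params["robot_speed_pct"] == speed_from_slowdown(60))  # True
```

The design choice is that the LLM proposes and deterministic code disposes. A malformed or out-of-range answer raises an error instead of silently reaching the robot.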

When we integrate Agentic AI, the robot doesn’t just follow a path; it pursues a goal. If it encounters a problem, such as a toy stuck in the dispenser, it doesn’t simply stop with an error. Instead, the agent runs a “Reasoning Loop”:

- Observe: “I tried to grab the toy, but it’s still stuck.” (The robot has attempted the grab multiple times.)

- Reason: “If I keep pulling, I might break it. I should try to nudge it from the side first.” (The robot tries nudging the toy from the side.)

- Act: It independently tries a different move to twist the toy free, then moves forward with packing.

- Communicate: If it cannot solve the problem, it raises an alarm requesting operator intervention. If it succeeds, it sends the operator a message with an image, for example: “I successfully cleared a jam in the dispenser by adjusting my grip angle. No human help was needed.”

- Human Calibration: The operator can later review the images and logs to understand why the dispenser jammed and take the necessary precautions.
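The reasoning loop can be sketched as a bounded escalation over alternative moves. This is a simplified, rule-based stand-in for an agentic planner. The move names, attempt limits, and `SimulatedDispenser` are hypothetical, and a real agent would choose the next move from perception rather than from a fixed list. The returned log is what an operator would review during human calibration.

```python
from dataclasses import dataclass


@dataclass
class SimulatedDispenser:
    """Stand-in for a real cell: the jammed toy only comes free with certain moves."""
    freeing_moves: frozenset

    def try_move(self, move: str) -> bool:
        return move in self.freeing_moves


# Hypothetical escalation plan: (move, max attempts). Straight pulls are capped
# so the robot does not keep pulling and risk breaking the toy.
PLAN = (("straight_pull", 2), ("side_nudge", 1), ("twist", 1))


def reasoning_loop(cell, plan=PLAN):
    """Observe -> reason -> act -> communicate. Returns (message, log)."""
    log = []  # kept for later human review ("human calibration")
    for move, max_attempts in plan:
        for attempt in range(1, max_attempts + 1):
            freed = cell.try_move(move)                                      # act
            log.append({"move": move, "attempt": attempt, "freed": freed})  # observe
            if freed:                                                        # communicate success
                return f"Cleared jam with '{move}'. No human help was needed.", log
        # reason: this move is not working, so escalate to the next one in the plan
    return "ALARM: jam not cleared, operator intervention required.", log    # communicate failure


message, log = reasoning_loop(SimulatedDispenser(frozenset({"side_nudge"})))
print(message)  # Cleared jam with 'side_nudge'. No human help was needed.
```

Bounding every step keeps the "independent" behaviour predictable: the agent can only try moves from an approved list, and it always ends either in success or in an alarm.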

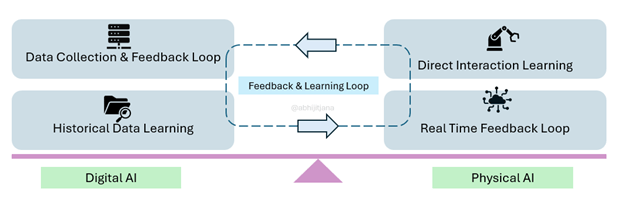

Feedback & Learning – Digital AI + Physical AI

Physical AI learns through direct, real-time interaction with its environment, adapting continuously via feedback from sensors and actions performed within safety limits. In contrast, Digital AI relies on historical data to inform models and decisions, cycling through data collection, analysis, and action.

In enterprise manufacturing, it is imperative that both types work together: digital systems such as defect detection adapt to new conditions at the edge, while physical systems such as assembly arms refine their parameters based on sensor input. This combination leverages both past insights and real-time experience for robust, adaptive decision-making.
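One simple way to picture this combination is a parameter that starts from a historical baseline (the digital side) and is then refined by live sensor feedback (the physical side), while always staying inside safety limits. The grip-force scenario, limits, gain, and "slip" readings below are assumptions chosen for illustration, not recommended values.

```python
from statistics import mean

# Hypothetical safety limits for an assembly arm's grip force (newtons).
SAFETY_MIN_N = 5.0
SAFETY_MAX_N = 20.0


def digital_baseline(historical_forces):
    """Digital AI side: start from the average force that worked in past production data."""
    return mean(historical_forces)


def physical_update(current_n, sensor_error_n, gain=0.3):
    """Physical AI side: move toward what the live sensor indicates, never outside safety limits.

    sensor_error_n > 0 means the (hypothetical) force sensor reports slip, so more force is needed.
    """
    proposed = current_n + gain * sensor_error_n
    return min(max(proposed, SAFETY_MIN_N), SAFETY_MAX_N)


force = digital_baseline([10.0, 12.0, 11.0])   # 11.0 N from history
for error in [2.0, 2.0, 30.0]:                 # hypothetical live slip readings
    force = physical_update(force, error)
    print(round(force, 2))                     # 11.6, then 12.2, then 20.0 (clamped)
```

The clamp is what makes continuous adaptation acceptable on the shop floor: learning can move the parameter, but never past limits set by people.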

Purpose, Quality, and Responsible Innovation

As AI shifts into the physical world, the consequences become real. Innovation must focus on purpose and quality.

AI leaders must ensure:

- AI decisions are auditable and explainable.

- Safety is built in by design (e.g., force limits, safety zones, certified stops).

- AI models are versioned, tested, and reversible, like any validated control.
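These requirements can be illustrated with a small guard that sits between the AI and the controller. It rejects targets inside a human safety zone, clamps excessive force, and records every decision together with the active model version. A simple registry also allows rolling back to a previous model. The limits, zone, and version strings are hypothetical. In a real cell, certified stops and other safety-rated functions run on certified safety hardware, not in application code like this; the sketch only shows the software-side checks.

```python
import json
from dataclasses import dataclass

# Hypothetical cell limits; real values come from the cell's risk assessment.
MAX_FORCE_N = 15.0
# Area where people may be present, as an axis-aligned box (mm): x_min, x_max, y_min, y_max.
HUMAN_ZONE = (0.0, 200.0, 0.0, 200.0)


@dataclass
class Command:
    x_mm: float
    y_mm: float
    force_n: float


def in_zone(x, y, zone=HUMAN_ZONE):
    x0, x1, y0, y1 = zone
    return x0 <= x <= x1 and y0 <= y <= y1


class ModelRegistry:
    """Keeps deployed model versions so a bad update can be rolled back."""

    def __init__(self):
        self.versions = []

    def deploy(self, version):
        self.versions.append(version)

    @property
    def active(self):
        return self.versions[-1]

    def rollback(self):
        if len(self.versions) > 1:
            self.versions.pop()
        return self.active


def guard(cmd, registry, audit_log):
    """Check an AI-proposed command against hard limits and record the decision."""
    if in_zone(cmd.x_mm, cmd.y_mm):
        verdict, reason = "REJECT", "target inside human safety zone"
    elif cmd.force_n > MAX_FORCE_N:
        verdict, reason = "CLAMP", f"force {cmd.force_n} N exceeds limit {MAX_FORCE_N} N"
        cmd = Command(cmd.x_mm, cmd.y_mm, MAX_FORCE_N)
    else:
        verdict, reason = "ACCEPT", "within limits"
    # Auditable: every decision is logged with the model version that proposed it.
    audit_log.append(json.dumps({"model": registry.active, "verdict": verdict, "reason": reason}))
    return verdict, cmd


registry = ModelRegistry()
registry.deploy("v1.0")
registry.deploy("v1.1")
audit = []
print(guard(Command(500.0, 500.0, 25.0), registry, audit))  # clamped to 15.0 N
print(registry.rollback())                                  # back to v1.0
```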

Closing Reflection

Over the last decade, advances in artificial intelligence have focused on training systems to better understand our world. This period was defined by gathering data, developing models, and refining algorithms to interpret information and drive digital decision-making. Now, the next era of AI is emerging—one in which AI is not only understanding but also interacting with the physical world. This transition brings new opportunities and challenges, especially regarding safety, ethics, and intelligent action.

As we move forward, a central question arises for every digital innovator: “Can we make AI not just intelligent, but impactful—not just digital, but physical?” This question underscores the importance of purpose and responsibility as AI systems begin to operate beyond virtual boundaries and directly affect the environment around us.